Latest

Speculative Decoding Impact on Mixtral Inferenc...

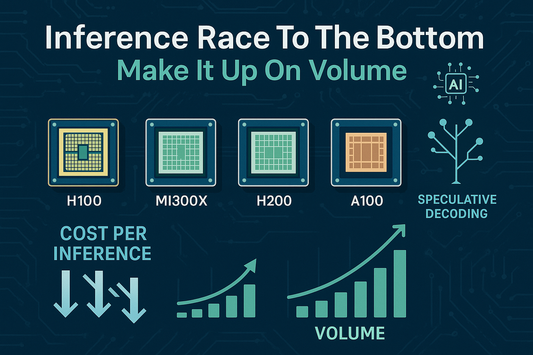

Market Context A growing set of organizations now operate models that surpass GPT-3.5 on various benchmarks (e.g., Mixtral 8x7B, Inflection-2, Claude 2, Gemini Pro, Grok), with more contenders imminent. Pre-training...

Performance per Watt: MI300 Versus H100 in Real...

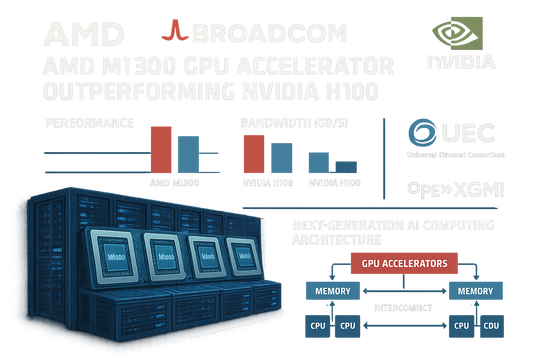

Overview MI300X is now generally available, accompanied by updates on performance, software readiness, and interconnect strategy. The release coincides with expanded collaborations intended to open AMD’s interconnects—both scale-up and scale-out—across...

TCO Modeling for GPU Clouds with PUE Considerat...

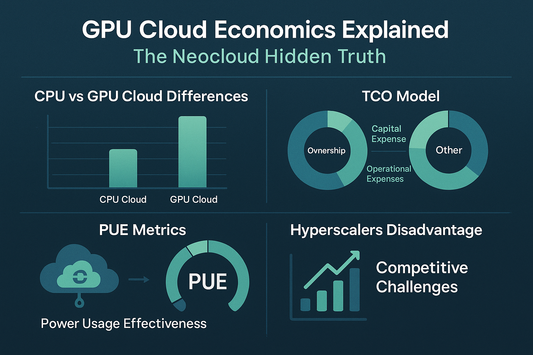

Why Pure-Play GPU Clouds Proliferated Over the past year, pure-play GPU providers have multiplied. The underlying driver is economic: GPU cloud operations are simpler on the software side than general-purpose...

Next-Generation Microsoft Silicon: Timing, Nami...

Infrastructure Context Microsoft is undertaking an AI infrastructure buildout exceeding $50 billion per year in datacenter capital for 2024 and beyond. While Nvidia GPUs dominate near-term deployments, the plan includes...

H20 Performance Comparison Against H100 in Chin...

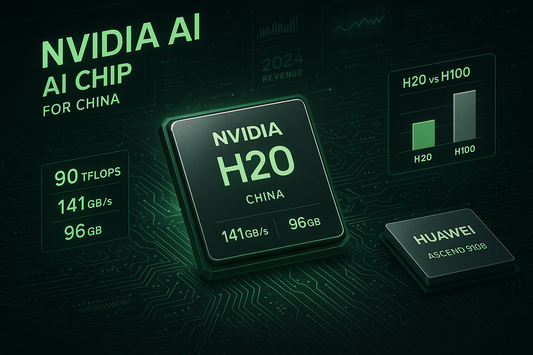

Overview and Export-Control Context New U.S. export rules, tightened in late 2023, placed limits on peak performance and performance density for AI accelerators shipped to China. Nvidia’s forthcoming H20, L20, and L2...

Supermicro and Quanta Direct MI300 Orders: Scal...

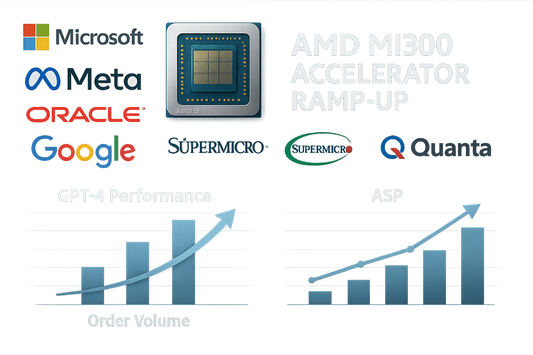

Context and Positioning AMD’s MI300 is positioned as a primary alternative to Nvidia and certain custom hyperscaler accelerators for large language model (LLM) inference, with growing traction supported by investments...
